Virtual DFG-NSF Research Workshop on Cybersecurity and Machine Learning

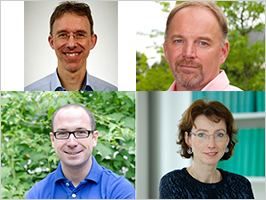

top left: Felix Freiling (© Privat), top right: Patrick McDaniel (© Patrick McDaniel/Penn State University), bottom left: Thorsten Holz (© Thorsten Holz/RUB), bottom right: Indra Spiecker (© Privat)

(05/26/21) The two-day “DFG-NSF Research Workshop on Cybersecurity and Machine Learning” opened on 17 May with over one hundred participants. The ninth in a series of interdisciplinary DFG-NSF Research Conferences organized by the National Science Foundation (NSF) and the Deutsche Forschungsgemeinschaft (DFG) since 2004, the event was originally scheduled to be held in Washington, DC, last August. The pandemic-induced move to a virtual setting made a different format and objective necessary. Originally intended as an academic conference to promote networking among the relevant research communities in Germany and the USA, the event became a workshop primarily concerned with identifying research potential at the intersection of cybersecurity and machine learning (ML), with the aim of setting down the resulting insights in a vision document.

From the start, planning for the workshop focused strongly on the fact that cybersecurity and ML always have significant social and legal implications, and that any legitimate approach to such matters can only be developed by incorporating the perspectives of legal studies and the social sciences. The event was organized by a steering committee made up of the two chairs, Patrick McDaniel (Pennsylvania State University) and Thorsten Holz (Ruhr University Bochum), along with Indra Spiecker genannt Döhmann (Goethe University Frankfurt am Main) and Felix Freiling (Friedrich Alexander University of Erlangen Nuremberg).

At a kick-off event on 25-27 January 2021, the steering committee met with a group of established experts in the research fields of cybersecurity, ML, privacy and law to identify the status of research and future research potential at the interface between these areas. Three themes emerged: “Securing ML Systems,” “Explainability, Transparency and Fairness,” and “Power Asymmetry and Privacy.” The draft vision document resulting from the event was made available to the wider academic community in Germany and the USA as a basis for discussion in the run-up to the research workshop.

At the start of the research workshop, DFG Vice President Kerstin Schill and Erwin Gianchandani, Senior Advisor in the Office of the Director of the NSF, emphasized the relevance of the topic to modern society. For example, although ML enables technological progress in all areas of life, its misuse can potentially endanger both individual privacy and the very foundations of democratic society. Gianchandani and Schill drew attention to the considerable investments made by the two funding organizations in the overarching research field of artificial intelligence (AI). The two noted that the complex challenges involving ML can only be solved through cooperation across disciplinary and national borders. In the words of Vice President Schill: “It … seems to me almost imperative to scientifically illuminate the resulting technical opportunities and security threats on the one hand, and the socio-political consequences on the other.” Gianchandani addressed the increasing importance of “responsible computing,” pointing out that in addition to purely technical issues, the impact on society and people’s coexistence had to be taken into consideration, too.


The research desiderata identified at the launch event certainly struck a chord. Representing a wide range of disciplines, from computer science and mathematics to law, economics, and the social sciences, research workshop participants actively engaged in the writing process, taking advantage of the interactive nature of the virtual event, complete with open mic and breakout sessions.

Eleven small groups discussed various topics in detail. Some participants addressed technical aspects of “federated learning” and security precautions for ML-based systems. A number of interdisciplinary subjects also featured, such as legal aspects of the security of ML applications and the emergence of power asymmetries through ML.

In the course of the discussions, it quickly became apparent to workshop participants that the overarching workshop themes – “Securing ML Systems,” “Explainability, Transparency and Fairness,” and “Power Asymmetry and Privacy” – were essentially inseparable. One participant pointed out the strong link between privacy and fairness, for instance. It emerged again and again how difficult communication can be, both between disciplines and across different legal contexts. This was particularly evident in the notion of fairness – a concept that is difficult to grasp at a detailed level, let alone in comprehensive terms, whether technically in the sense of programming or in the legal sense. This underlined the importance of providing a forum for the exchange of ideas between these diverse communities, to help initiate future joint research.

Participants engaged in discussion with great enthusiasm, and the outcomes will feed into the final vision document to be handed over to the two funding organizations. What was born out of necessity due to the pandemic ultimately proved to be a very effective tool for charting a path of cooperation between the various disciplines on both sides of the Atlantic.